DRAM vs. NAND: Understanding the 2026 AI Memory Bottleneck

2/8/2026

The AI revolution isn't just hungry for compute power; it's starving for memory. In 2026, DRAM prices have surged more than 170% year-over-year, NAND production for 2026 is already sold out with shortages expected to persist into 2027, and memory has become the critical bottleneck for everything from data centers to your next smartphone. Here's what investors need to know about the memory supercycle, and how to position your portfolio accordingly.

What Is the 2026 AI Memory Bottleneck?

The semiconductor industry is experiencing what analysts are calling a "structural crisis"—and this time, it's not about processors or GPUs. It's about memory.

According to TrendForce's latest February 2026 analysis, conventional DRAM contract prices are now expected to surge by 90-95% quarter-over-quarter in Q1 2026, far above its initial forecast of 55-60%. PC DRAM specifically is projected to increase by over 100% QoQ, which would set a record for the largest quarterly price surge ever. NAND Flash prices are following suit, with contract prices expected to rise 55-60% QoQ.

This isn't a typical cyclical shortage. Unlike the 2020-2023 chip crisis caused by pandemic supply chain disruptions, the current memory shortage stems from a permanent structural reallocation of manufacturing capacity. The world's three largest memory manufacturers—Samsung Electronics, SK Hynix, and Micron Technology—are deliberately shifting wafer production away from consumer memory toward high-margin AI infrastructure products.

As IDC analysts put it: "This is a zero-sum game. Every wafer allocated to an HBM stack for an Nvidia GPU is a wafer denied to the LPDDR5X module of a mid-range smartphone or the SSD of a consumer laptop."

The numbers tell a stark story. Throughout 2025, DRAM prices rose 172%. DDR5 spot prices have quadrupled since September 2025. According to the Bloomsbury Intelligence and Security Institute, this represents the most severe memory price escalation in the industry's history, with DRAM and NAND prices doubling in a single month during the crisis's peak.

The "Memory Wall" Has Arrived

For decades, computing performance was limited primarily by processor speed. Moore's Law pushed transistor density ever higher, delivering predictable performance improvements. But as the industry enters the AI era, a new constraint has emerged: the Memory Wall.

The Memory Wall describes the growing gap between processor speed and memory bandwidth. Modern AI accelerators can perform quadrillions of operations per second, but that compute is only useful if data can be fed to it fast enough. When NVIDIA CEO Jensen Huang unveiled the Vera Rubin platform at CES 2026, he emphasized that memory bandwidth, not compute, is now the primary bottleneck for large-scale AI systems.
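To make the Memory Wall concrete, the sketch below applies a simple roofline-style check using two figures quoted later in this article: 3.6 exaFLOPS of inference per 72-GPU Vera Rubin rack and 22 TB/s of HBM4 bandwidth per GPU. The 4-bit weight size and the rule of thumb of two FLOPs per parameter per generated token are assumptions for illustration, and the model ignores KV-cache traffic, so treat it as an order-of-magnitude sketch rather than a performance prediction.

```python
# Roofline-style "memory wall" check (illustrative sketch).
# From the article: 3.6 exaFLOPS inference per 72-GPU rack, 22 TB/s HBM4 per GPU.
# Assumptions: 4-bit (0.5-byte) weights, ~2 FLOPs per parameter per token,
# KV-cache reads ignored.

RACK_INFERENCE_FLOPS = 3.6e18
GPUS_PER_RACK = 72
HBM_BANDWIDTH_BYTES_PER_S = 22e12


def ridge_point(peak_flops: float, bandwidth: float) -> float:
    """FLOPs a workload must perform per byte moved to become compute-bound."""
    return peak_flops / bandwidth


def decode_intensity(batch_size: int, bytes_per_param: float = 0.5) -> float:
    """Arithmetic intensity (FLOPs/byte) of token generation, weights only.

    Each step reads every weight once, but that one read is shared by all
    sequences in the batch, each costing ~2 FLOPs per parameter.
    """
    return 2.0 * batch_size / bytes_per_param


per_gpu_flops = RACK_INFERENCE_FLOPS / GPUS_PER_RACK  # 50 PFLOPS per GPU
ridge = ridge_point(per_gpu_flops, HBM_BANDWIDTH_BYTES_PER_S)

for batch in (1, 64, 512, 1024):
    intensity = decode_intensity(batch)
    bound = "compute" if intensity >= ridge else "memory"
    print(f"batch={batch:5d}  intensity={intensity:7.1f} FLOP/B  -> {bound}-bound")

print(f"ridge point ~ {ridge:.0f} FLOPs per byte")
```

Under these assumptions, generating tokens for a single user performs only a few FLOPs per byte fetched, far below the roughly 2,300 FLOPs per byte needed to keep the compute units busy. That gap is why bandwidth, rather than raw FLOPS, sets the pace for interactive inference.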

This fundamental shift has transformed memory from a commodity component into a strategic asset that determines AI system performance, pricing, and market structure.

DRAM vs. NAND vs. HBM: What's the Difference?

Before diving into the investment implications, it's essential to understand the three types of memory driving this market. If you're new to analyzing stocks, grasping these fundamentals will help you evaluate memory companies more effectively.

How DRAM Works (and Why AI Needs So Much)

DRAM (Dynamic Random Access Memory) is the "working memory" of computing devices. It stores data that processors need to access quickly and frequently—think of it as a computer's short-term memory. DRAM is volatile, meaning it loses all data when power is cut.

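The technology stores each bit of data as charge in a tiny capacitor, paired with an access transistor, within an integrated circuit. Because capacitors naturally leak charge, every cell must be rewritten ("refreshed") within a retention window of a few tens of milliseconds (64 ms is the classic standard), so the memory controller issues tens of thousands of refresh commands every second; hence the "Dynamic" in the name. Refresh costs power, but the trade-off buys a small, simple cell with access times measured in nanoseconds.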
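The refresh cost can be estimated with a few lines of arithmetic. This sketch assumes timing values typical of DDR4-era devices: a 64 ms retention window split into 8,192 refresh commands, each occupying the rank for a refresh cycle time (tRFC) of 350 ns. Newer generations and denser dies use different values, so the result is illustrative only.

```python
# Illustrative DRAM refresh arithmetic. Assumed values (typical of DDR4-era
# devices, not a specification for any product): 64 ms retention window,
# 8,192 refresh commands per window, tRFC = 350 ns per command.

RETENTION_WINDOW_S = 0.064
REFRESH_COMMANDS_PER_WINDOW = 8192
T_RFC_NS = 350.0

# How often the memory controller must issue a refresh command.
cmds_per_second = REFRESH_COMMANDS_PER_WINDOW / RETENTION_WINDOW_S

# Average interval between refresh commands (tREFI), in nanoseconds.
t_refi_ns = RETENTION_WINDOW_S / REFRESH_COMMANDS_PER_WINDOW * 1e9

# Fraction of time the rank cannot serve reads or writes because it is refreshing.
overhead = T_RFC_NS / t_refi_ns

print(f"{cmds_per_second:,.0f} refresh commands per second")
print(f"tREFI = {t_refi_ns:.1f} ns, refresh overhead = {overhead:.1%}")
```

With these assumptions the rank spends roughly 4-5% of its time refreshing. This overhead grows as dies get denser, because each refresh command must cover more cells.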

Key characteristics of DRAM:

- Speed: Very fast read/write access (nanoseconds)

- Use cases: PCs, smartphones, servers, data centers

- Cost: More expensive per gigabyte than NAND

- Market size: ~$130 billion in 2025, projected to exceed $200 billion by 2028

- Key players: SK Hynix (36% market share), Samsung (34%), Micron (26%)

AI applications are particularly DRAM-hungry because large language models (LLMs) must keep their entire working state—context windows, key-value caches, intermediate activations, and routing decisions—in active memory during inference. A single AI server can require hundreds of gigabytes of DRAM. When an AI model generates a response, it isn't simply retrieving static information—it maintains a live working state that must remain accessible in real-time.
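The key-value (KV) cache is a good example of that live working state, because it grows with every token of context a model keeps. The sketch below uses the standard KV-cache size formula for a transformer with a hypothetical set of dimensions (80 layers, 8 KV heads of size 128, FP16 cache). These are illustrative assumptions, not any product's specifications, and model weights are not counted.

```python
# Hypothetical KV-cache sizing for a transformer LLM during inference.
# The model dimensions are illustrative assumptions, and model weights and
# activations are NOT included.

GIB = 1024 ** 3


def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to cache keys and values for every token of every sequence."""
    # Factor of 2: one key vector and one value vector per head, per layer, per token.
    per_token = 2 * layers * kv_heads * head_dim * bytes_per_elem
    return per_token * seq_len * batch


# Assumed model: 80 layers, 8 KV heads of dimension 128, FP16 (2-byte) cache.
one_session = kv_cache_bytes(80, 8, 128, seq_len=128 * 1024, batch=1)
eight_sessions = kv_cache_bytes(80, 8, 128, seq_len=128 * 1024, batch=8)

print(f"1 session at 128K tokens:  {one_session / GIB:.0f} GiB")
print(f"8 sessions at 128K tokens: {eight_sessions / GIB:.0f} GiB")
```

Under these assumptions, a single 128K-token conversation needs about 40 GiB of cache, and eight concurrent ones need about 320 GiB. That is how one server quickly reaches the hundreds of gigabytes mentioned above.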

To illustrate the scale: NVIDIA's new Vera Rubin NVL72 rack contains 54 TB of LPDDR5X memory connected to its Vera CPUs alone—and that's in addition to its HBM. This massive memory footprint explains why hyperscalers are absorbing an ever-larger share of global DRAM production.

What Is NAND Flash? (Storage vs. Active Memory)

NAND Flash is non-volatile storage memory—it retains data even without power. You'll find NAND in SSDs (solid-state drives), USB drives, memory cards, and smartphone storage. While slower than DRAM, NAND offers much higher density and lower cost per gigabyte, making it ideal for persistent data storage.

The technology stores data by trapping electrons in memory cells, traditionally floating-gate transistors and, in most modern 3D NAND, charge-trap cells. The cells are wired in series strings that resemble a NAND logic gate, which gives the technology its name. Modern 3D NAND stacks these cells vertically, sometimes more than 200 layers high, to achieve remarkable storage density.

Key characteristics of NAND:

- Speed: Slower than DRAM (microseconds vs. nanoseconds)

- Use cases: SSDs, data center storage, smartphones, cameras

- Cost: Significantly cheaper per gigabyte than DRAM

- Types: SLC (highest endurance), MLC, TLC, QLC (highest density, lowest cost)

- AI role: Stores training datasets, model checkpoints, vector databases, retrieval indexes, logs, and KV caches when sessions idle
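The SLC-to-QLC progression in the list above comes down to how many bits each cell holds. The sketch below shows the basic trade-off: storing n bits per cell requires the cell to hold 2^n distinguishable charge levels. That raises density but narrows the margin between levels, which is the usual explanation for lower endurance at higher densities. Real devices also depend on error correction and controller design, which this sketch ignores.

```python
# Density/endurance trade-off across NAND cell types (illustrative sketch).
# Storing n bits in one cell means telling 2**n charge levels apart, so the
# voltage margin between neighbouring levels shrinks as n grows.

CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell: int) -> int:
    """Distinct threshold-voltage states a cell must hold reliably."""
    return 2 ** bits_per_cell


def cells_for_capacity(capacity_bits: int, bits_per_cell: int) -> int:
    """Cells needed to store capacity_bits (ceiling division)."""
    return -(-capacity_bits // bits_per_cell)


TERABIT = 2 ** 40  # a "1Tb" die, using binary units

for name, bits in CELL_TYPES.items():
    cells = cells_for_capacity(TERABIT, bits)
    print(f"{name}: {charge_levels(bits):2d} levels per cell, "
          f"{cells / TERABIT:.2f} cells per stored bit")
```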

Enterprise SSDs are becoming the largest NAND Flash application segment in 2026 as AI data centers scale their storage infrastructure. Phison's CEO confirmed that all NAND production for 2026 is already sold out, with shortages expected to persist through 2027. A 1Tb (terabit) TLC NAND chip that cost $4.80 in July 2025 now costs $10.70, more than double in six months.

At first glance, NAND might seem less critical than DRAM for AI workloads since SSDs are far slower and don't participate in real-time token generation. However, large-scale AI systems cannot operate without substantial NAND storage. Training datasets, model checkpoints, vector databases for retrieval-augmented generation (RAG), and log storage all rely on NAND as the only technology that can deliver the required capacity at sustainable cost.

HBM: The Specialized Memory Powering AI GPUs

HBM (High Bandwidth Memory) is a specialized form of DRAM designed specifically for AI accelerators and high-performance computing. Unlike traditional DRAM that sits on a circuit board, HBM stacks multiple DRAM dies vertically using through-silicon vias (TSVs), then mounts them directly on an interposer alongside the GPU or processor.

The technology represents a fundamental rethinking of memory architecture. Traditional DRAM achieves higher speeds primarily through faster clock frequencies, but this approach faces physical limits: signal integrity issues on long traces, power consumption, and thermal constraints. HBM takes a different approach—instead of faster clocks, it uses a massively parallel interface. While DDR5 uses a 64-bit data bus, HBM uses a 1024-bit (and HBM4 uses 2048-bit) interface, allowing huge amounts of data to move in parallel.

Key characteristics of HBM:

- Bandwidth: HBM3E delivers ~1.2 TB/s; HBM4 delivers up to 2.6 TB/s per stack

- Architecture: 3D-stacked (8-16 DRAM dies), wide interface (1024-2048 bits)

- Power efficiency: Lower energy per bit due to shorter data paths and lower voltage operation

- Cost: ~5x more expensive than standard DRAM per gigabyte

- Wafer usage: Each gigabyte of HBM consumes roughly 3x the wafer capacity of DDR5

- Generations: HBM (2015) → HBM2 (2016) → HBM2E (2020) → HBM3 (2023) → HBM3E (2024) → HBM4 (2026)
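The wide-bus argument above reduces to one formula: peak bandwidth equals bus width times per-pin data rate, divided by 8 bits per byte. The per-pin rates in this sketch are illustrative assumptions chosen to sit near commonly quoted figures, not vendor specifications. With them, the formula reproduces the ~1.2 TB/s HBM3E figure in the list and lands at ~2.6 TB/s for HBM4.

```python
# Peak interface bandwidth = bus width (bits) x per-pin data rate (Gbit/s) / 8.
# The per-pin rates below are illustrative assumptions, not vendor specs.


def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s for one module or one HBM stack."""
    return bus_width_bits * pin_rate_gbps / 8


INTERFACES = {
    "DDR5-6400 module, 64-bit": (64, 6.4),
    "HBM3E stack, 1024-bit": (1024, 9.6),
    "HBM4 stack, 2048-bit": (2048, 10.0),
}

for name, (width, rate) in INTERFACES.items():
    print(f"{name:26s} {peak_bandwidth_gbs(width, rate):8.1f} GB/s")
```

The comparison shows where HBM's advantage comes from. In this sketch, HBM4's per-pin rate is only about 1.6x DDR5-6400's, while its bus is 32 times wider.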

HBM is the critical component enabling AI chips like NVIDIA's H100, H200, Blackwell, and the upcoming Vera Rubin platforms. The HBM total addressable market is projected to reach $100 billion by 2028, up from $35 billion in 2025—growing at a 40% compound annual rate.
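The quoted growth rate can be checked with the standard compound annual growth rate (CAGR) formula. The sketch treats 2025 to 2028 as three compounding years. It also applies the same formula to the DRAM market figures given earlier (~$130 billion to more than $200 billion), for comparison.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


hbm = cagr(35, 100, years=3)    # HBM TAM: $35B (2025) -> $100B (2028)
dram = cagr(130, 200, years=3)  # DRAM market: ~$130B (2025) -> $200B (2028)

print(f"HBM TAM CAGR:     {hbm:.1%}")
print(f"DRAM market CAGR: {dram:.1%}")
```

The HBM projection works out to roughly 42% a year, consistent with the ~40% quoted. The broader DRAM market grows at roughly 15% a year, which shows how much faster the HBM slice is expected to expand.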

HBM4: The Next Generation

The latest generation, HBM4, represents a significant leap forward. NVIDIA's Vera Rubin GPU, announced at CES 2026, uses HBM4 exclusively, with each GPU package containing eight stacks that deliver 288GB of capacity and 22 TB/s of bandwidth. That is 2.75x the 8 TB/s offered by HBM3E in Blackwell GPUs.

HBM4 achieves this through a doubled interface width (2048 bits vs. 1024 bits) and data transfer rates exceeding 10 Gbps per pin. The JEDEC standard specifies rates starting at 6.4 Gbps, but NVIDIA pushed suppliers for 13 Gbps or higher, driving last-minute redesigns that briefly delayed qualification timelines.
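The Rubin figures can be run backwards to see what per-pin rate they imply. Assuming the 22 TB/s is spread evenly across eight stacks with 2048-bit interfaces, each stack delivers about 2.75 TB/s. That requires roughly 10.7 Gbps per pin, consistent with rates "exceeding 10 Gbps" and slightly above the 2.6 TB/s per-stack figure quoted earlier.

```python
# Back-solving the per-pin data rate implied by 22 TB/s across eight HBM4
# stacks, assuming each stack has a 2048-bit interface (decimal units).


def implied_pin_rate_gbps(total_tb_per_s: float, stacks: int, bus_width_bits: int) -> float:
    """Per-pin rate in Gbit/s needed to reach a total bandwidth in TB/s."""
    total_bits_per_s = total_tb_per_s * 1e12 * 8  # TB/s -> bit/s
    return total_bits_per_s / (stacks * bus_width_bits) / 1e9


rate = implied_pin_rate_gbps(22, stacks=8, bus_width_bits=2048)
per_stack_tbs = 22 / 8
print(f"per stack: {per_stack_tbs:.2f} TB/s, implied pin rate: {rate:.2f} Gbps")
```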

Perhaps most importantly, HBM4 introduces a logic base die—essentially a small processor at the bottom of the memory stack. This enables custom functionality to be integrated directly into the memory, opening possibilities for custom AI-specific optimizations. We're entering an era of "Custom HBM" where AI giants can request specific IP blocks integrated into their memory purchases.

| Specification | DRAM (DDR5) | NAND Flash | HBM3E | HBM4 |
|---|---|---|---|---|
| Primary Use | Working memory | Storage (SSDs) | AI accelerators | Next-gen AI accelerators |
| Bandwidth | ~50-100 GB/s | ~7-14 GB/s | ~1.2 TB/s | ~2.2-2.6 TB/s |
| Interface Width | 64-bit | N/A | 1024-bit | 2048-bit |
| Volatility | Volatile | Non-volatile | Volatile | Volatile |
| Cost/GB | $$ | $ | $$$$ | $$$$$ |
| 2026 Price Trend | +90-100% QoQ | +55-60% QoQ | +20% (stabilizing) | Premium pricing |

Why Is AI Causing a Memory Shortage?

The memory shortage isn't simply about increased demand—it's about a fundamental restructuring of how the world's memory capacity is allocated. Understanding this dynamic is crucial for investors looking to position themselves in the semiconductor sector.

Hyperscaler Spending Has Exploded

Global data center equipment and infrastructure spending hit approximately $290 billion in 2024, with hyperscaler capital expenditure approaching $600 billion in 2026—a 36% year-over-year increase. The scale of individual projects is unprecedented:

- OpenAI's Stargate project signed agreements with Samsung and SK Hynix for up to 900,000 DRAM wafers per month—representing up to 40% of global DRAM output. The deal involves the supply of undiced wafers rather than packaged chips to streamline logistics for the massive data center build-out.

- Amazon announced $200 billion in capital spending for 2026, primarily for AI infrastructure.

- Microsoft, Google, Meta, and Amazon have placed open-ended orders, accepting "as much supply as available regardless of cost."

- TSMC announced a staggering $52-56 billion capex budget for 2026, up from $40 billion in 2025.

According to TrendForce, data centers will consume over 70% of high-end memory chips produced in 2026. A 2024 McKinsey analysis projected that global demand for AI-ready data center capacity would grow at approximately 33% annually through 2030, with AI workloads consuming roughly 70% of total data center capacity by the decade's end.

The NVIDIA Vera Rubin Effect

NVIDIA's announcement of the Vera Rubin platform at CES 2026 crystallized the scale of memory demand. Each Vera Rubin NVL72 rack contains:

- 72 Rubin GPUs with 336 billion transistors each

- 20.7 TB of HBM4 delivering 1.6 PB/s of bandwidth

- 54 TB of LPDDR5X connected to 36 Vera CPUs

- 3.6 exaFLOPS of inference performance
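The rack-level totals follow from the per-GPU figures quoted earlier in this article (288 GB and 22 TB/s of HBM4 per GPU). The sketch below multiplies them out. The per-CPU LPDDR5X figure is derived by dividing the rack total evenly across the 36 CPUs, which assumes an even split.

```python
# Cross-checking Vera Rubin NVL72 rack totals from per-device figures
# quoted in this article (decimal units throughout).

GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
HBM4_PER_GPU_GB = 288       # eight HBM4 stacks per GPU package
HBM4_BW_PER_GPU_TBS = 22
LPDDR5X_PER_RACK_TB = 54
RACK_INFERENCE_EFLOPS = 3.6

hbm_total_tb = GPUS_PER_RACK * HBM4_PER_GPU_GB / 1000         # GB -> TB
hbm_bw_pbs = GPUS_PER_RACK * HBM4_BW_PER_GPU_TBS / 1000       # TB/s -> PB/s
lpddr_per_cpu_tb = LPDDR5X_PER_RACK_TB / CPUS_PER_RACK        # assumes even split
pflops_per_gpu = RACK_INFERENCE_EFLOPS * 1000 / GPUS_PER_RACK
dram_class_total_tb = hbm_total_tb + LPDDR5X_PER_RACK_TB

print(f"HBM4 per rack:      {hbm_total_tb:.1f} TB at {hbm_bw_pbs:.2f} PB/s")
print(f"LPDDR5X per CPU:    {lpddr_per_cpu_tb:.1f} TB")
print(f"Inference per GPU:  {pflops_per_gpu:.0f} PFLOPS")
print(f"DRAM-class total:   {dram_class_total_tb:.1f} TB per rack")
```

The per-GPU figures reproduce the 20.7 TB and ~1.6 PB/s in the list. Together, HBM4 and LPDDR5X add up to roughly 75 TB of DRAM-class memory in one rack.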

To put this in perspective: a single Vera Rubin rack carries roughly 75 TB of DRAM-class memory (20.7 TB of HBM4 plus 54 TB of LPDDR5X). And hyperscalers are ordering these racks by the thousands. AWS, Google Cloud, Microsoft, and Oracle Cloud are all listed as early 2026 deployment partners.

The Rubin GPU's 22 TB/s of memory bandwidth per socket, achieved through eight stacks of HBM4, is 2.75x Blackwell's 8 TB/s. NVIDIA initially targeted 13 TB/s when it first teased Rubin, but reached 22 TB/s entirely through silicon improvements, without relying on compression techniques.

Capacity Reallocation to HBM

Here's the core problem: memory manufacturers are actively shifting production capacity away from commodity DRAM and NAND toward high-margin HBM. As Micron's business chief Sumit Sadana explained to CNBC: "When Micron makes one bit of HBM memory, it has to forgo making three bits of more conventional memory for other devices."

The economics are compelling for manufacturers:

- Samsung: Earning approximately 60% margins on HBM vs. ~40% on commodity DRAM (Q3 2025)

- SK Hynix: HBM accounted for 77% of overall revenues in recent quarters

- Micron: Exited its consumer "Crucial" brand entirely to redirect capacity to enterprise and AI customers

Samsung has expanded its 1c DRAM capacity to target 60,000 wafers per month specifically for HBM4 production. SK Hynix is completing its M15X fab, investing over 20 trillion won for HBM3E and HBM4 production. New fabs take 3+ years to build, meaning meaningful supply relief won't arrive until 2027-2028 at the earliest.

The Zero-Sum Wafer Game

Global cleanroom capacity is finite. Every wafer allocated to HBM production is a wafer not available for DDR5 modules or smartphone LPDDR5X. IDC's analysis describes this as "a potentially permanent, strategic reallocation of the world's silicon wafer capacity."

The problem is compounded by HBM's manufacturing complexity:

- HBM requires significantly more lithography steps, testing, and expensive high-density substrates

- Advanced packaging capacity (TSMC's CoWoS, for example) is also constrained

- ABF substrate lead times have lengthened as high-end GPUs and HBM modules compete for limited output

The result? Supply growth for standard DRAM and NAND is expected to be well below historical norms in 2026. IDC projects:

- DRAM supply growth: ~16% YoY (vs. 20-25% historically)

- NAND supply growth: ~17% YoY

- DRAM demand growth: ~35%

- NAND demand growth: 20-22%
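A rough way to read these projections is to ask how far demand would outrun supply after one year if the two started in balance. That starting point is a hypothetical assumption, since the market is already tight. The sketch below compounds IDC's growth rates under that assumption.

```python
# Hypothetical illustration: starting from balanced supply and demand (an
# assumption), how far do IDC's projected growth rates push demand ahead
# of supply after one year?


def implied_gap(demand_growth: float, supply_growth: float) -> float:
    """Excess of demand over supply after one year, as a fraction of supply."""
    return (1 + demand_growth) / (1 + supply_growth) - 1


dram_gap = implied_gap(demand_growth=0.35, supply_growth=0.16)
nand_gap_low = implied_gap(demand_growth=0.20, supply_growth=0.17)
nand_gap_high = implied_gap(demand_growth=0.22, supply_growth=0.17)

print(f"DRAM: demand exceeds supply by {dram_gap:.1%}")
print(f"NAND: demand exceeds supply by {nand_gap_low:.1%} to {nand_gap_high:.1%}")
```

In a real market, higher prices and demand destruction would absorb part of this gap, so the figures indicate relative pressure rather than a literal shortfall. Even so, the DRAM imbalance of roughly 16% is several times larger than the NAND imbalance of roughly 3-4%.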

How Are Memory Prices Expected to Move in 2026?

For investors evaluating memory stocks, understanding the price trajectory is essential. These pricing dynamics directly impact revenue and margins for memory manufacturers.

DRAM: Historic Price Surge

According to the latest TrendForce data (February 2026), previous forecasts have been dramatically revised upward:

- Conventional DRAM: +90-95% QoQ in Q1 2026 (revised from initial 55-60% forecast)

- PC DRAM: +100%+ QoQ (largest quarterly increase on record)

- Server DRAM: +60%+ QoQ

- Mobile DRAM (LPDDR4X/5X): +90% QoQ (steepest increases ever recorded)

For context, DRAM prices rose 172% over 2025, and DDR5 spot prices have roughly quadrupled since September 2025: a 16Gb (gigabit) DDR5 chip that cost $6.84 in September 2025 was priced at $27.20 by December, and prices have continued rising.

TeamGroup's General Manager warned that a 16GB memory module now costs approximately $217-228 in components alone, before manufacturer premiums, logistics, or taxes. Enthusiasts are reporting that 32GB DDR5-6000 CL30 RAM kits that cost $99 in March 2025 are now selling for $297 from the same vendors: exactly triple the price in just eight months.
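These price points are internally consistent, which a few lines of arithmetic can show. The sketch assumes the DDR5 chip referenced above is a 16-gigabit (2 GB) die, a common spot-price reference, and ignores ECC chips. Under those assumptions, eight chips make a 16GB module, and eight chips at $27.20 come to about $218 of DRAM, in line with the low end of TeamGroup's estimate.

```python
# Price-multiple and module bill-of-materials check using figures from this
# section. Assumption: the spot-price chip is a 16-gigabit (2 GB) DDR5 die.


def price_multiple(old: float, new: float) -> float:
    """How many times more expensive the item became."""
    return new / old


ddr5_chip = price_multiple(6.84, 27.20)   # 16Gb DDR5 chip, Sep -> Dec 2025
ddr5_kit = price_multiple(99.0, 297.0)    # 32GB DDR5-6000 CL30 kit, ~8 months

CHIP_CAPACITY_GB = 2                      # assumed 16-gigabit die
MODULE_CAPACITY_GB = 16
chips_per_module = MODULE_CAPACITY_GB // CHIP_CAPACITY_GB  # ECC chips ignored
module_chip_cost = chips_per_module * 27.20

print(f"DDR5 chip: {ddr5_chip:.2f}x, retail kit: {ddr5_kit:.2f}x")
print(f"{chips_per_module} chips per 16GB module -> ${module_chip_cost:.2f} of DRAM")
```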

Gartner forecasts DRAM prices to increase by 47% in 2026 overall due to significant undersupply in both traditional and legacy DRAM markets. Morgan Stanley now expects DRAM prices to jump 62% in 2026, while NAND prices could surge 75%.

NAND: Enterprise Demand Dominates

NAND pricing is following DRAM upward, driven primarily by enterprise SSD demand:

- NAND Flash contracts: +55-60% QoQ in Q1 2026 (revised from 33-38%)

- Client SSDs: +40%+ QoQ (steepest increase among NAND products)

- Enterprise SSDs: SanDisk reportedly planning +100% price increases for Q1 2026

- Enterprise 30TB TLC SSDs: +257% between Q2 2025 and Q1 2026

Kioxia's CEO declared that "the days of cheap 1TB SSDs for around $45 are over."

NVIDIA's Vera Rubin platform is contributing to enterprise NAND demand through its Inference Context Memory Storage Platform (ICMSP). Each Vera Rubin NVL72 rack pairs a BlueField-4 DPU with a 512GB SSD per compute tray, totaling 9.2 TB of NAND per rack. Assuming NVIDIA ships 50,000 racks annually, that's roughly 0.5 exabytes of NAND demand from this single product line.
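The NAND estimate can be reproduced step by step. The sketch assumes 18 compute trays per NVL72 rack: 72 GPUs at four per tray, which also matches the 9.2 TB total divided by 512 GB. The 50,000-rack volume is the hypothetical shipment figure from the paragraph above, not a reported number.

```python
# Reproducing the per-rack and fleet-level NAND estimate (decimal units).
# Assumption: 18 compute trays per rack (72 GPUs / 4 per tray).

COMPUTE_TRAYS_PER_RACK = 18
SSD_PER_TRAY_GB = 512
RACKS_PER_YEAR = 50_000       # hypothetical shipment volume from the text

nand_per_rack_tb = COMPUTE_TRAYS_PER_RACK * SSD_PER_TRAY_GB / 1000   # GB -> TB
fleet_nand_eb = nand_per_rack_tb * RACKS_PER_YEAR / 1_000_000        # TB -> EB

print(f"NAND per rack: {nand_per_rack_tb:.1f} TB")
print(f"Annual NAND for {RACKS_PER_YEAR:,} racks: {fleet_nand_eb:.2f} EB")
```

The result, about 0.46 EB, rounds to the roughly 0.5 exabytes cited.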

Timeline for Relief

Industry experts don't expect meaningful supply relief until 2027-2028:

- 2026: Tight supply continues; prices remain elevated. New fabs under construction but not yet producing.

- 2027: New fab capacity begins coming online (Micron Idaho, SK Hynix M15X in May 2026 pilot operations, ramping through 2027)

- 2028: Samsung P5 facility in Pyeongtaek operational; potential price stabilization

- 2030: Micron's New York megafab expected online—potentially the largest semiconductor facility in the U.S.

Phison's CEO has warned that NAND could face "severe shortages for the next ten years" as manufacturers remain cautious about capacity expansion after previous boom-bust cycles. He cited two reasons, the first being that "every time flash makers invested more, prices collapsed, and they never recouped their investments."

How Will the Memory Shortage Impact Consumer Electronics?

The memory shortage isn't just an enterprise problem—it's rippling through to smartphones, PCs, gaming consoles, and virtually every consumer electronic device. Understanding this impact helps contextualize both the market opportunity and the broader economic implications.

Smartphones: The Low-End Squeeze

According to Counterpoint Research, global smartphone shipments are expected to decline 2.1% in 2026 as surging component costs impact demand. The firm has revised its 2026 forecast down by 2.6 percentage points, with Chinese OEMs seeing the biggest downward revisions.

The impact by price segment:

- Low-end smartphones: BoM costs up ~25% from DRAM alone. Base models likely returning to 4GB RAM in 2026—a regression from recent progress.

- Mid-range smartphones: BoM costs up ~15%. OEMs forced to raise launch prices and modify pricing of existing models.

- High-end smartphones: BoM costs up ~10%. Even Apple may reevaluate pricing strategies; memory share in iPhone BoM expected to increase significantly in Q1 2026.

Counterpoint expects further cost impacts in the 10-15% range through Q2 2026, and now forecasts average smartphone selling prices to rise 6.9% YoY, up from its initial forecast of 3.6%.

TrendForce notes that smaller smartphone brands are "increasingly struggling to obtain enough resources due to tight memory supply, indicating possible industry consolidation. Larger companies are expanding their market share, while smaller competitors risk being pushed out."

PCs and Laptops: The 15-20% Price Hike

Major PC vendors—Lenovo, Dell, HP, Acer, and Asus—have warned of 15-20% price hikes from the second half of 2026. IDC projects:

- Moderate scenario: PC market contracts 4.9% (vs. initial 2.4% decline forecast); ASPs rise 4-6%

- Pessimistic scenario: PC market contracts 8.9%; ASPs rise 6-8%

Dell Technologies COO Jeff Clarke stated during an analyst call that the company had "never witnessed costs escalating at the current pace," describing tighter availability across DRAM, hard drives, and NAND flash memory. Analysts at Morgan Stanley downgraded Dell from "Overweight" to "Underweight" citing heavy exposure to rising server memory costs.

DRAM and NAND currently make up 10-18% of a notebook's BoM cost. This share is expected to surpass 20% in 2026 as memory prices climb sharply over several consecutive quarters.
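The move from 10-18% toward more than 20% of notebook BoM follows from simple arithmetic. The sketch assumes memory prices rise by a hypothetical 50% or 100% while every other component's cost stays flat. Both the increases and the flat-cost assumption are illustrations, not forecasts.

```python
# How memory's share of a notebook bill of materials (BoM) changes when
# memory costs rise and all other component costs are held flat (assumption).


def memory_share_after(initial_share: float, memory_price_increase: float) -> float:
    """Memory's share of BoM after memory costs rise and other costs stay flat."""
    memory_cost = initial_share * (1 + memory_price_increase)
    other_cost = 1 - initial_share
    return memory_cost / (memory_cost + other_cost)


for share in (0.10, 0.15, 0.18):
    for rise in (0.5, 1.0):
        new_share = memory_share_after(share, rise)
        print(f"start {share:.0%}, memory +{rise:.0%}: new share {new_share:.1%}")
```

Under these assumptions, a notebook starting at 15% crosses 20% with a memory cost increase of 50%, and one starting at 18% reaches about 30% if memory costs double.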

High-end ultrathin notebooks, which often have mobile DRAM soldered directly onto the motherboard, are particularly vulnerable since they cannot reduce costs by lowering specifications or replacing modules.

"Shrinkflation" in Consumer Electronics

When manufacturers can't raise prices, they often quietly reduce specifications—a practice called "shrinkflation." Consumer Reports warns that "the $600 laptop you buy in 2026 might look identical to the 2025 model, but under the hood it may have a dimmer screen and 8GB of RAM instead of 16GB."

Counterpoint Research notes that in some smartphone models, "we are seeing downgrades of components like camera modules, displays, and audio components" to offset memory costs while maintaining price points.

IDC expects that 2026 could be "one of the most expensive years ever for consumer electronics," with the effects extending beyond computers to TVs, tablets, smart-home devices, and even automobiles.

Which Memory Stocks Stand to Benefit?

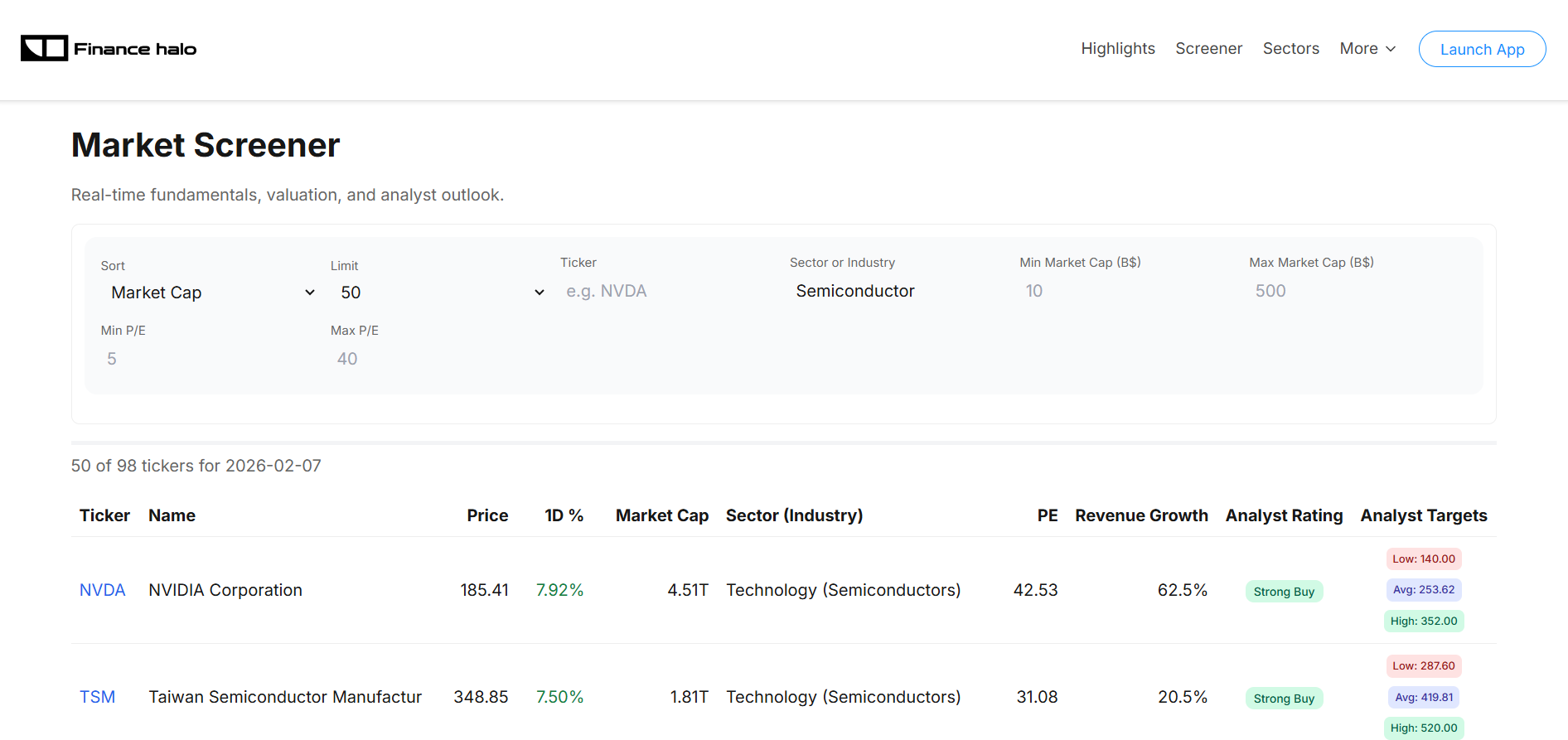

The memory supercycle creates compelling opportunities for investors who understand how to evaluate semiconductor companies.

Micron Technology (MU) — Pure-Play AI Memory

Micron is the only major U.S.-based pure-play memory company, making it a popular choice for investors seeking direct exposure to the AI memory boom without Korean exchange access.

Recent Performance:

- Stock up ~247% over the past year; hit all-time high of $455.50 on January 30, 2026

- Q1 FY2026 revenue: $13.64 billion (+56% YoY)

- EPS: $4.60 (+175% YoY)

- Gross margin: 56%

- Q2 FY2026 guidance: $18.7 billion revenue (~37% sequential growth), ~67% gross margin, EPS ~$8.19

- Full-year FY2026 capex: ~$20 billion, weighted to second half

Investment Thesis:

- Entire HBM production for 2026 is sold out with pricing and volume agreements finalized, including early HBM4

- HBM4 performance exceeds 11 Gbps—outperforming JEDEC specifications and NVIDIA's operating targets

- Planning to lift HBM4 capacity to 15,000 wafers per month in 2026 (~30% of total HBM output)

- Breaking ground on massive New York megafab in January 2026—could eventually house four fabs

- Trading at ~7-11x forward earnings despite triple-digit growth

- Manufacturing leadership: four consecutive DRAM nodes and three NAND nodes

Analyst Consensus: Strong Buy (35 Buy, 4 Hold, 2 Sell out of 54 analysts). Average price target: $317-350. High target: $500 (Rosenblatt). Citi expects DRAM pricing to increase every quarter in 2026, driving further upside to consensus estimates.

Key Risk: Recent reports suggest NVIDIA may bypass Micron for HBM4 orders in favor of SK Hynix and Samsung for its Vera Rubin platform. SemiAnalysis projects SK Hynix at 70% HBM4 market share and Samsung at 30%, with no sign of Micron orders. However, Micron has confirmed customer contracts and strong feedback on its 12-high HBM3E.


SK Hynix — HBM Market Leader

SK Hynix is the undisputed leader in HBM, commanding approximately 50-62% market share depending on the metric. The company's stock has risen over 200% in 2025, making it one of the top performers in the Asian technology sector.

Key Strengths:

- First to market with HBM3E; completed HBM4 development and ready for mass production

- Primary supplier to NVIDIA—secured over two-thirds of HBM supply orders for Vera Rubin

- UBS predicts ~70% market share in HBM4 market for NVIDIA's Rubin platform

- Entire DRAM, HBM, and NAND capacity sold out through 2026

- Overtook Samsung in DRAM revenue for the first time since 1992, capturing 36% of the market vs. Samsung's 34%

- First HBM3E supplier for Google's latest TPUs (v7p and v7e)

- HBM4 offers 40% improvement in power efficiency and data rates of 10 Gbps

Valuation: P/E ratio of ~17. Morgan Stanley boosted 2026-2027 earnings forecasts by 56-63%. Market capitalization more than doubled over 2025, surpassing 150 trillion won.

Capacity Expansion: SK Hynix is set to complete the first clean room at its M15X fab in May 2026 and begin pilot operations. The facility—with over 20 trillion won invested—will manufacture both HBM3E and HBM4, plus 10nm-class 6th-generation (1c) DRAM lines for HBM4E.

Risks: Listed only on Korean exchanges (KOSPI), limiting accessibility for some investors. Geopolitical exposure to China trade restrictions. CEO Kwak Noh-Jung acknowledged that "the business environment in 2026 would be tougher than last year" despite strong positioning.

Samsung Electronics — Diversified Memory Giant

Samsung remains the world's largest memory manufacturer by overall capacity, though it had fallen to third place in HBM (17% share in Q2 2025). The company is staging a dramatic comeback with HBM4.

Recent Developments:

- First to ship HBM4 to NVIDIA—production began February 2026 at Pyeongtaek campus

- Passed all NVIDIA qualification tests; also qualified for AMD

- Co-CEO Jun Young-hyun declared "Samsung is back" after HBM4 success; customers stated HBM4 shows "differentiated competitiveness"

- Planning 50% HBM capacity expansion in 2026 to ~250,000 wafers per month

- Stock up ~16% YTD in 2026; shares rose 2.2% on HBM4 qualification news while SK Hynix fell 2.9%

- Using 6th-generation 10nm-class DRAM and 4nm logic base die; HBM4 speeds up to 11 Gbps (vs. 8 Gbps JEDEC standard)

Investment Thesis: More diversified than pure-memory plays, with exposure to smartphones, displays, and foundry services. Samsung's "turnkey" approach—leveraging its unique position as the only company housing a leading-edge foundry, memory division, and advanced packaging house under one roof—offers a differentiated value proposition.

Counterpoint Research projects Samsung's HBM market share could exceed 30% in 2026—nearly doubling from its Q2 2025 position. This comeback story represents both risk and opportunity.

Valuation: Attractive relative valuation (~12x P/E) compared to SK Hynix, offering potential upside if HBM4 momentum continues.

| Company | 2026 YTD Return | HBM Market Share | P/E Ratio | Key Catalyst |
|---|---|---|---|---|
| Micron (MU) | +16% | ~21% → uncertain | ~7-11x fwd | New fab capacity, HBM4 ramp |
| SK Hynix | +11% | ~50-62% | ~17x | NVIDIA Vera Rubin orders |
| Samsung | +16% | ~17% → 30%+ | ~12x | HBM4 comeback, first to ship |

Semiconductor Equipment: The Picks-and-Shovels Play

For investors seeking exposure to the memory supercycle with potentially lower volatility than memory producers, semiconductor equipment makers offer a "picks-and-shovels" approach. These companies benefit from capacity expansion regardless of which memory maker wins market share.

The Equipment Winners

Bank of America expects semiconductor equipment stocks to outperform in 2026 "as upstream beneficiaries of capacity expansions and tech upgrades to support multi-year AI infra demand." The firm sees "upside in C2H'26 and CY27 as visibility into capex plans and fab ramp timelines improves."

ASML Holding (ASML): The Dutch lithography giant holds a near-monopoly on EUV (extreme ultraviolet) lithography equipment essential for advanced memory nodes. ASML hit a $500 billion market cap in early 2026 after TSMC's record earnings and capex guidance. The shift to HBM4 and advanced DRAM nodes (1c, 1d) requires more EUV layers than ever before, making ASML's tools essential infrastructure.

Lam Research (LRCX): Leading supplier of etch and deposition equipment critical for memory manufacturing. Lam Research is seeing "insatiable" demand for its etching tools used in HBM4 production. The stock has exploded 192% over the past year with 62% return on equity and 44% YoY earnings growth in Q1 2026.

Applied Materials (AMAT): Broad semiconductor equipment exposure across deposition, etch, and advanced packaging. AMAT is benefiting from the industry's shift toward "Gate-All-Around" (GAA) transistor architectures. Bank of America expects etch and deposition (AMAT/LRCX) to expand to 42% of the wafer fab equipment market, up 3 percentage points since 2023.

KLA Corporation (KLAC): Process control and yield management equipment—critical as HBM manufacturing complexity increases. Bank of America analyst Vivek Arya called KLA a "best-in-breed stock" and has never downgraded it from a Buy rating. The firm expects process control to gain 100 bps of share to 13% of WFE in 2026.

Capex Driving Equipment Demand

The equipment opportunity is underpinned by massive capex commitments:

- TSMC: $52-56 billion capex for 2026 (up from $40 billion in 2025)

- Micron: ~$20 billion capex in FY2026

- Samsung: 50% HBM capacity expansion planned

- SK Hynix: M15X fab with >20 trillion won investment

Bank of America forecasts 2026 to feature "another ~30% growth towards the first $1 trillion for semiconductor sales, supported by nearly double-digit YoY wafer fab equipment (WFE) sales growth."

Free cash flow margins at AMAT, LRCX, and KLAC have expanded from below 15% in 2023 to over 20%, with Bank of America projecting FCF margins of 20-30% through 2028.

What Are the Risks for Memory Investors?

Memory stocks have historically been among the most cyclical in the semiconductor industry. Understanding the risks is just as important as understanding the opportunity. When evaluating any stock, it's wise to consider valuation metrics like P/E ratio in the context of the industry cycle.

Cyclicality of Memory Markets

Memory markets have historically followed brutal boom-bust cycles. As Jim Handy of Objective Analysis noted, "For every story you read about demand going gangbusters, there's a corresponding story about the AI bubble." Memory manufacturers remember the severe downturns of 2008 and 2018, which is why they've been cautious about capacity expansion.

If AI demand slows or the "AI bubble" deflates, memory prices could correct sharply, compressing margins and earnings for all three major players. Seeking Alpha analysts warn that Micron's "forward multiple appears elevated due to peak-cycle profits; as supply catches up, the stock may face multiple compression and earnings decline."

Potential Oversupply by 2027-2028

Multiple new fabs are scheduled to come online between 2027-2030:

- Micron Idaho fab (2027-2028)

- Micron New York megafab (2030)

- SK Hynix M15X (mid-2027 utilization)

- Samsung P5 Pyeongtaek (2028)

If demand growth slows while supply expands, the market could swing from shortage to oversupply. Some analysts anticipate Micron's earnings and margins will peak in 2026 or early 2027, with a likely downturn thereafter.

Samsung's HBM4 Comeback Threat

Samsung's successful HBM4 qualification for NVIDIA represents a potential market share shift that could disrupt the competitive landscape. If Samsung aggressively prices HBM4 to regain share, it could compress margins industry-wide.

Counterpoint Research projects Samsung's HBM market share could exceed 30% in 2026, nearly doubling from its 17% share in Q2 2025. SK Hynix CEO Kwak Noh-Jung acknowledged that "the business environment in 2026 would be tougher than last year" as competition intensifies.

Geopolitical Risks

U.S. export controls on HBM to China have prompted Chinese retaliation targeting critical minerals including gallium, germanium, and rare earth elements. Both SK Hynix and Samsung have significant operations in China that face regulatory pressure.

The Bloomsbury Intelligence report notes that "defence systems, ranging from fighter jets to satellite communications, rely on the same DRAM supply chains that are currently facing constraints"—adding national security dimensions to commercial concerns.

Chinese semiconductor foundry SMIC warned that escalating fears of impending shortages are leading its customers to hold back on 2026 planning as they navigate uncertainty.

Valuation Concerns

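While memory stocks appear cheap on forward P/E ratios, those multiples are calculated on peak-cycle earnings. If earnings fall as new supply arrives, a stock that looks inexpensive today can prove expensive on normalized, mid-cycle earnings, which is the multiple-compression risk Seeking Alpha analysts flag for Micron.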

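When evaluating growth-adjusted valuations, consider the PEG ratio (the P/E ratio divided by the expected annual EPS growth rate) to assess whether current prices adequately reflect cycle risk. Keep in mind that peak-cycle growth rates can make PEG look artificially low. For a comprehensive comparison of these approaches, see our guide on P/E ratio vs. EPS vs. PEG.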
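A minimal Python sketch of the valuation arithmetic, using the common definition PEG = P/E divided by expected annual EPS growth expressed in percent. The share price, EPS figures, and growth rate below are hypothetical and do not describe any company in this article; "mid-cycle EPS" stands in for whatever normalized earnings estimate an investor chooses to use.

```python
def pe_ratio(price: float, eps: float) -> float:
    """Price-to-earnings ratio; not meaningful for zero or negative earnings."""
    if eps <= 0:
        raise ValueError("P/E is not meaningful for non-positive EPS")
    return price / eps


def peg_ratio(pe: float, growth_pct: float) -> float:
    """PEG ratio: P/E divided by expected annual EPS growth in percent."""
    if growth_pct <= 0:
        raise ValueError("PEG is not meaningful for non-positive growth")
    return pe / growth_pct


# Hypothetical cyclical stock: one share price, two views of its earnings.
price = 100.0
peak_eps = 10.0       # assumed earnings at the top of the memory cycle
mid_cycle_eps = 4.0   # assumed normalized earnings across a full cycle
growth_pct = 20.0     # assumed long-run annual EPS growth

pe_peak = pe_ratio(price, peak_eps)      # low multiple on peak earnings
pe_mid = pe_ratio(price, mid_cycle_eps)  # higher multiple on normalized earnings
print(f"P/E on peak EPS: {pe_peak:.1f}x, PEG: {peg_ratio(pe_peak, growth_pct):.2f}")
print(f"P/E on mid-cycle EPS: {pe_mid:.1f}x, PEG: {peg_ratio(pe_mid, growth_pct):.2f}")
```

The same $100 price reads as 10x earnings (PEG 0.50) on peak EPS but 25x (PEG 1.25) on mid-cycle EPS, which is why low forward multiples on memory stocks deserve a second look.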

How Can Investors Play the Memory Supercycle?

There are several approaches to gaining exposure to the memory shortage, depending on your risk tolerance and investment objectives.

Direct Memory Plays

Micron (MU) offers the most direct U.S.-listed exposure to the memory market. Wall Street analysts expect EPS of $31.20 in 2026—more than four times last year's profit. At current prices, the stock trades at less than 11x forward earnings despite triple-digit growth.
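The figures in the paragraph above imply a few quick bounds, sketched in Python below. The inputs are the analyst EPS estimate and multiples quoted there; treating "last year's profit" as per-share earnings is our assumption, and the variable names are illustrative.

```python
# Inputs taken from the paragraph above.
FORWARD_EPS = 31.20     # analysts' expected 2026 EPS (USD)
MAX_FORWARD_PE = 11.0   # "less than 11x forward earnings"
EPS_MULTIPLE = 4.0      # "more than four times last year's profit" (assumed per share)

# Forward P/E = price / forward EPS, so P/E < 11 means price < 11 * EPS.
implied_max_price = round(FORWARD_EPS * MAX_FORWARD_PE, 2)

# If 2026 EPS is more than 4x last year's, last year's EPS was below EPS / 4.
implied_max_prior_eps = round(FORWARD_EPS / EPS_MULTIPLE, 2)

# Growing to more than 4x means growth above (4 - 1) * 100 = 300%.
implied_min_growth_pct = (EPS_MULTIPLE - 1.0) * 100.0

print(f"Share price below ${implied_max_price:,.2f}")
print(f"Prior-year EPS below ${implied_max_prior_eps:,.2f}")
print(f"EPS growth above {implied_min_growth_pct:.0f}%")
```

In other words, "less than 11x" puts the share price under roughly $343, and "more than four times" means EPS growth above 300%, which is the "triple-digit growth" the paragraph refers to.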

For investors comfortable trading on Korean exchanges, SK Hynix offers exposure to the HBM leader, with over 50% market share and orders secured through 2026.

Samsung offers more diversification but less pure memory exposure, though its HBM4 breakthrough makes it an increasingly compelling AI memory play.

Semiconductor Equipment Makers

Companies that sell equipment to memory fabs benefit from capacity expansion regardless of which memory maker wins market share:

- Lam Research (LRCX): Leading supplier of etch and deposition equipment. Stock up 192% over the past year.

- Applied Materials (AMAT): Broad semiconductor equipment exposure. Bank of America price target: $300.

- KLA Corp (KLAC): Process control and yield management. Bank of America price target: $1,450.

- ASML: Sole supplier of EUV lithography equipment. $500 billion market cap.

Bank of America expects semiconductor equipment stocks to benefit from "nearly double-digit YoY wafer fab equipment sales growth" in 2026, calling this upcycle "more powerful and durable than previous ones."

Adjacent AI Infrastructure Plays

Memory demand is driven largely by AI accelerators. Companies like NVIDIA and AMD offer adjacent exposure to the same AI build-out, though their stocks already carry premium AI valuations. You can explore NVIDIA's fundamentals by analyzing NVDA with our AI assistant.

NVIDIA's market capitalization crossed $4.5 trillion following TSMC's capex guidance, as investors viewed the foundry's spending as a guarantee of future GPU supply.

Portfolio Allocation Framework

Consider your exposure across the memory value chain:

- High conviction/higher risk: Pure memory plays (Micron, SK Hynix). Direct exposure to memory pricing but highest cyclical risk.

- Moderate risk: Diversified memory and foundry (Samsung). HBM4 upside with a diversification buffer.

- Lower volatility: Equipment makers (LRCX, AMAT, KLAC, ASML). Benefit from capex regardless of memory maker market share.

- ETF approach: The VanEck Semiconductor ETF (SMH) spreads exposure across the semiconductor sector and may offer better risk-adjusted returns than individual picks.

Conclusion

The 2026 AI memory bottleneck represents one of the most significant structural shifts in the semiconductor industry in decades. Unlike cyclical shortages of the past, this crisis stems from a deliberate reallocation of the world's memory manufacturing capacity toward AI infrastructure—and relief isn't expected until 2027-2028 at the earliest.

Key takeaways for investors:

- Pricing dynamics: Conventional DRAM contract prices are expected to rise 90-95% QoQ in Q1 2026, with PC DRAM up more than 100%, the largest quarterly increase on record. NAND Flash contract prices are expected to rise 55-60%.

- The HBM bottleneck: HBM is the critical constraint for AI, consuming roughly 3x the wafer capacity of standard DRAM per bit. Each Vera Rubin rack requires 20.7 TB of HBM4 and 54 TB of LPDDR5X.

- Market leaders: SK Hynix leads HBM with ~50-62% share and secured Vera Rubin orders; Samsung is staging an HBM4 comeback and was first to ship.

- U.S. exposure: Micron offers the most direct U.S.-listed pure-play exposure at low headline valuations (~7-11x forward earnings), though those multiples rest on peak-cycle earnings.

- Picks-and-shovels: Equipment makers (LRCX, AMAT, KLAC, ASML) provide lower-volatility exposure to memory capex.

- Consumer impact: Smartphone and PC prices rising 10-20%; "shrinkflation" reducing specifications in budget devices.

- Cycle risk: Earnings are likely to peak in 2026-2027, with a potential downturn as new capacity comes online.

Memory has transformed from a commodity component into a strategic asset critical for AI performance. For investors willing to navigate the cyclical risks, the memory supercycle offers compelling opportunities—but position sizing and risk management remain essential. As one industry observer put it: "For the first time in decades, memory isn't just another line on the BOM. In the age of AI, it is the bottleneck."

Try it yourself: Analyze Micron with Finance Halo's AI assistant—get instant price targets, technical analysis, and fundamental insights on any memory stock in seconds.

Disclaimer: This article is for educational purposes only and does not constitute investment advice. Memory stocks are highly cyclical and can experience significant volatility. Always do your own research and consider your risk tolerance before making investment decisions.